[…]micro

# Export version information
__version__ = version
__version_major__, __version_minor__, __version_patch__ = _parse_version(__version__)
__git_hash__ = git_hash
__git_branch__ = git_branch

def initialize(args=None,
               model: torch.[…]Module = None,
               optimizer: Optional[…] = None,
               model_parameters: Optional[…] = None,
               training_data: Optional[…] = None,
               lr_scheduler: Optional[…] = None,
               mpu=None,
               dist_init_required: Optional[…] = None,
               collate_fn=None,
               config=None,
               config_params=None):
    """Initialize the DeepSpeed Engine.

    Arguments:
        args: an object containing local_rank and deepspeed_config fields.

        model: Required: nn.Module class before applying any wrappers.

        optimizer: Optional: a user-defined Optimizer or a Callable that returns an Optimizer object.
            This overrides any optimizer definition in the DeepSpeed JSON config.

        model_parameters: Optional: an iterable of torch.Tensors or dicts.
            Specifies which Tensors should be optimized.
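The docstring only says that `args` is an object with `local_rank` and `deepspeed_config` fields. One way such an object could be produced is with the standard library's `argparse`. The sketch below is an illustration under assumptions: the helper name `build_args`, the flag names, the defaults, and the file name `ds_config.json` are all hypothetical and are not taken from the source.

```python
import argparse


def build_args(argv=None):
    """Build a namespace carrying the two fields the docstring names."""
    parser = argparse.ArgumentParser(description="hypothetical training script")
    # Flag names are assumptions chosen to match the field names in the docstring.
    parser.add_argument("--local_rank", type=int, default=-1,
                        help="rank of this process on the local node")
    parser.add_argument("--deepspeed_config", type=str, default=None,
                        help="path to a DeepSpeed JSON config file")
    return parser.parse_args(argv)


# Simulate a command line; "ds_config.json" is a placeholder file name.
args = build_args(["--local_rank", "0", "--deepspeed_config", "ds_config.json"])
print(args.local_rank, args.deepspeed_config)
```

A namespace built this way exposes both fields as plain attributes, which is all the docstring asks of `args`.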

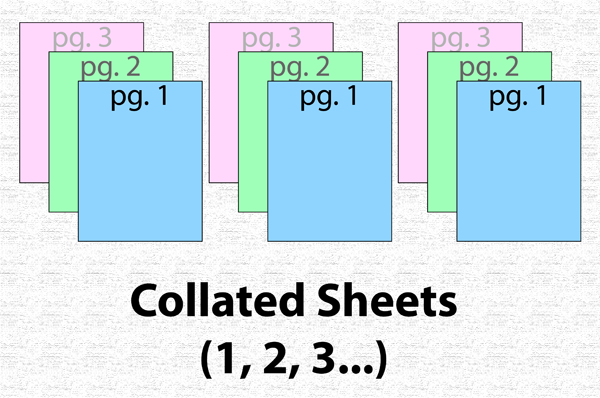

Def of collate Patch

'''
Copyright 2020 The Microsoft DeepSpeed Team
'''
import sys
import types

from typing import Optional, Union
import torch
from torch.optim import Optimizer
from […]_scheduler import _LRScheduler
from packaging import version as pkg_version

from .runtime.engine import DeepSpeedEngine, DeepSpeedOptimizerCallable, DeepSpeedSchedulerCallable
from .runtime.engine import ADAM_OPTIMIZER, LAMB_OPTIMIZER
from .inference.engine import InferenceEngine
from .runtime.lr_schedules import add_tuning_arguments
from .[…]nfig import DeepSpeedConfig, DeepSpeedConfigError
from .runtime.activation_checkpointing import checkpointing
from .ops.transformer import DeepSpeedTransformerLayer, DeepSpeedTransformerConfig
from .module_inject import replace_transformer_layer, revert_transformer_layer
from .runtime import DeepSpeedOptimizer, ZeROOptimizer
from .git_version_info import version, git_hash, git_branch

def _parse_version(version_str):
    '''Parse a version string and extract the major, minor, and patch versions.'''
    ver = pkg_version.[…]
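The body of `_parse_version` is cut off in the listing, so the exact call it makes is not visible here. As a hedged illustration of how a version string can be split into major, minor, and patch numbers with the `packaging` library that the file imports, the sketch below uses `packaging.version.parse`. The function name `parse_version_sketch` is hypothetical, and the use of `parse` is an assumption rather than a confirmed reading of the original.

```python
from packaging import version as pkg_version


def parse_version_sketch(version_str):
    """Return (major, minor, patch) as integers for a PEP 440 version string."""
    ver = pkg_version.parse(version_str)
    # packaging names the third release component "micro".
    return ver.major, ver.minor, ver.micro


print(parse_version_sketch("0.4.1"))  # prints (0, 4, 1)
```

With `packaging`, a version string that has fewer than three release components reports the missing ones as 0, so `"1.2"` would yield `(1, 2, 0)`.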
